Statistical analysis of high-dimensional biomedical data: a gentle introduction to analytical goals, common approaches and challenges

BMC Medicine volume 21, Article number: 182 (2023)

Abstract

Background

In high-dimensional data (HDD) settings, the number of variables associated with each observation is very large. Prominent examples of HDD in biomedical research include omics data with a large number of variables such as many measurements across the genome, proteome, or metabolome, as well as electronic health records data that have large numbers of variables recorded for each patient. The statistical analysis of such data requires knowledge and experience, sometimes of complex methods adapted to the respective research questions.

Methods

Advances in statistical methodology and machine learning methods offer new opportunities for innovative analyses of HDD, but at the same time require a deeper understanding of some fundamental statistical concepts. Topic group TG9 “High-dimensional data” of the STRATOS (STRengthening Analytical Thinking for Observational Studies) initiative provides guidance for the analysis of observational studies, addressing particular statistical challenges and opportunities for the analysis of studies involving HDD. In this overview, we discuss key aspects of HDD analysis to provide a gentle introduction for non-statisticians and for classically trained statisticians with little experience specific to HDD.

Results

The paper is organized with respect to subtopics that are most relevant for the analysis of HDD, in particular initial data analysis, exploratory data analysis, multiple testing, and prediction. For each subtopic, main analytical goals in HDD settings are outlined. For each of these goals, basic explanations for some commonly used analysis methods are provided. Situations are identified where traditional statistical methods cannot, or should not, be used in the HDD setting, or where adequate analytic tools are still lacking. Many key references are provided.

Conclusions

This review aims to provide a solid statistical foundation for researchers, including statisticians and non-statisticians, who are new to research with HDD or simply want to better evaluate and understand the results of HDD analyses.

Background

The goal of the topic group TG9 “High-dimensional data” (HDD) of the STRATOS (STRengthening Analytical Thinking for Observational Studies) [1] initiative is to provide guidance for planning, conducting, analyzing, and reporting studies involving high-dimensional biomedical data. The increasing availability and use of “big” data in biomedical research, characterized by “large n” (independent observations) and/or “large p” (number of dimensions of a measurement or number of variables associated with each independent observation), has created a need for the development and novel application of statistical methods and computational algorithms. Either large n or p may present difficulties for data storage or computations, but large p presents several major statistical challenges and opportunities [2]. The dimension p can range from several dozen to millions. The situation of very large p is the focus of TG9 and this paper. Throughout the paper, “p” will refer to the number of variables and the term “subject” will be used broadly to refer to independent observations, including human or animal subjects, or biospecimens derived from them; or other independent experimental or observational units. Researchers who design and analyze such studies need a basic understanding of the commonly used analysis methods and should be aware of pitfalls when statistical methods that are established in the low-dimensional setting cannot, or should not, be used in the HDD setting.

This overview, a product of STRATOS topic group TG9, provides a gentle introduction to fundamental concepts in the analysis of HDD, in the setting of observational studies in biomedical research. The focus is on analytical methods; however, issues related to study design, interpretation, transportability of findings, and clinical usefulness of results should also be considered as briefly discussed throughout this paper.

The STRATOS initiative and the STRATOS topic group TG9 “High-dimensional data”

The STRATOS initiative (www.stratos-initiative.org) is a large collaboration involving experts in many different areas of biostatistical research. The objective of STRATOS is to provide accessible and sound guidance for the design and analysis of observational studies [1]. This guidance is intended for applied statisticians and other data analysts with varying levels of statistical training, experience and interests. TG9 is one of nine topic groups of STRATOS and deals with aspects of HDD analysis.

Main issues addressed by TG9 often overlap with those of other TGs, but in the work of TG9 there is always a focus on the HDD aspect. Sometimes TG9 guidance will build upon that of other TGs to adapt it for relevance to HDD (see the “Discussion” section), but also completely new issues arise and require novel statistical approaches.

High-dimensional data are now ubiquitous in biomedical research, very frequently in the context of observational studies. Particularly omics data, i.e., high-throughput molecular data (e.g., genomics, transcriptomics, proteomics, and metabolomics) have provided new insights into biological processes and disease pathogenesis and have furthered the development of precision medicine approaches [3]. Rapidly expanding stores of electronic health records contain not only standard demographic, clinical, and laboratory data collected through a patient history, but also information from potentially many different providers involved in a patient’s care [4]. Data may be derived from multiple sources and can be represented in many different forms. Collectively, these data can be leveraged to support programs in comparative effectiveness and health outcomes research, and to monitor public health. Many statistical methods that are discussed here may be applied to health records data as well as to omics data, but our primary focus here is on the analysis of omics data.

Simultaneously, advances in statistical methodology and machine learning methods have contributed to improved approaches for data mining, statistical inference, and prediction in the HDD setting. Strong collaborations between data and computational scientists (e.g., statisticians, computational biologists, bioinformaticians, and computer scientists) and other biomedical scientists (e.g., clinicians and biologists) are essential for optimal generation, management, processing, analysis, and interpretation of these high-dimensional biomedical data [5].

Credibility and importance of research findings from biomedical studies involving HDD can be better judged when there is understanding of various approaches for statistical design and analysis along with their strengths and weaknesses. While this overview directly aims to improve understanding, simultaneously this guidance implies what information is necessary to report to fully appreciate how a study was designed, conducted, and analyzed. Whether study results prompt further pre-clinical or early clinical work, or translation to clinical use, ability to judge quality, credibility, and relevance of those results is critical. It is important to avoid sending research programs down unproductive paths or allowing flawed research results such as poorly performing prognostic models or therapy selection algorithms generated from HDD to be implemented clinically [6]. Historically, research involving biomarkers and prognostic modelling has been criticized for lack of rigor, reproducibility, and clinical relevance [7,8,9,10], and for poor reporting [11, 12]. At least as many deficiencies are also common in biomedical research involving HDD. The goal of STRATOS TG9 is to reduce these deficiencies, and improve rigor and reproducibility, by providing widely accessible didactic materials pertinent to studies involving HDD.

Study design

In any observational study, including in the HDD setting, study design plays a crucial role in relation to the research question. A first important point is the precise definition of the target population and the sampling procedure. The subjects included in a study (or biospecimens derived from them) may be selected from the population by a random or other statistically designed sampling procedure (e.g., case–control, case-cohort), or may simply represent a “convenience” sample. It is therefore important to understand whether the subjects are representative of the target population, how the variables associated with subjects were measured or ascertained, and whether there are potential confounding factors. Failure to account for confounding factors or minimize bias in subject or variable ascertainment can lead to useless or misleading results.

Outcome-dependent sampling is rather common in observational studies, particularly for those investigating risk factors for relatively uncommon diseases or outcomes. Examples include classical matched or unmatched case–control designs along with two-phase sampling from a cohort (case-cohort or nested case–control). Another often-used strategy oversamples long survivors, or, for continuous outcomes, subjects with high and low values of the outcome variable. When any such sampling strategies are employed, it is important to use inferential procedures [13, 14] that properly account for the sampling design.

Laboratory experiments generating high-dimensional assay data should adhere to the same best practices as traditional controlled experiments measuring only one or a few analytes, including randomization, replication, blocking, and quality monitoring. Arguably, careful design might be even more important in the setting of HDD generation because HDD assays may be especially sensitive to technical artifacts. Even when a study is primarily observational yet involves analysis of stored biospecimens using omics assays, good design principles should be followed when performing the assays. Best practices include randomizing biospecimens to assay batches to avoid confounding assay batch effects with other factors of interest. For unmatched case–control studies, balancing (randomizing) cases and controls into batches may provide important advantages for reducing the influence of batch effects [15]. For matched case–control studies or studies involving analysis of serial specimens from each subject, grouping matched or longitudinal sets within the same assay batch can be a convenient way to control for batch effects.
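
As an illustration of the balancing idea for an unmatched case–control study, the following minimal sketch shuffles specimens within each group and then deals them out across batches in turn, so that every batch receives a similar number of cases and controls. The function name `assign_batches`, the specimen identifiers, and the choice of four batches are hypothetical and serve only as an example; a real study would also need to respect batch capacity and any matching structure.

```python
import random
from collections import Counter

def assign_batches(labels, n_batches, seed=0):
    """Randomly assign specimens to batches, balancing each group across batches.

    labels: dict mapping specimen id -> group label (e.g., "case" / "control").
    Returns a dict mapping specimen id -> batch index (0-based).
    """
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced
    assignment = {}
    offset = 0  # rotate the starting batch so that no batch is systematically fuller
    for group in sorted(set(labels.values())):
        ids = sorted(s for s, g in labels.items() if g == group)
        rng.shuffle(ids)  # random order within the group
        for i, specimen in enumerate(ids):
            assignment[specimen] = (offset + i) % n_batches
        offset += len(ids)
    return assignment

# Hypothetical example: 12 cases and 12 controls, 4 assay batches
labels = {f"case{i}": "case" for i in range(12)}
labels.update({f"ctrl{i}": "control" for i in range(12)})
batches = assign_batches(labels, n_batches=4)

# Cross-tabulate batch by group to check the balance
per_batch = Counter((batches[s], labels[s]) for s in labels)
for b in range(4):
    print(b, per_batch[(b, "case")], per_batch[(b, "control")])
```

Recording the seed and the resulting allocation alongside the assay data makes it possible to check later whether batch and case status were in fact unconfounded.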

Another fundamental aspect of design is sample size, i.e., the number of distinct subjects measured; these are referred to as biological replicates. Whenever there is interest in making inference beyond an individual subject, e.g., assessing differential gene expression between groups of subjects with different phenotypes or exposed to different conditions such as treatments, biological replicates are required. In the HDD setting, standard sample size calculations generally do not apply. If statistical tests are performed one variable at a time (e.g., differential expression of each gene comparing two groups), then the number of tests performed for HDD is typically so large that a sample size calculation applying stringent multiplicity adjustment would lead to an enormous sample size. Alternative approaches to controlling false positive findings in HDD studies are discussed in section “TEST: Identification of informative variables and multiple testing.” If the goal is to develop a risk or prognostic model using HDD, typical recommendations about the number of events required per variable break down [16]. Other sample size methods that require assumptions about the model are challenging to implement considering the complexity of models that might be used in HDD settings [17, 18], as discussed in section “PRED2.4: Sample size considerations.” In reality, HDD studies are often conducted with inadequate sample size, which is an important reason why many results are not reproducible and never advance to use in practice [19].
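
To give a rough sense of how a stringent multiplicity adjustment inflates the required sample size, the sketch below uses the standard normal-approximation formula for a two-sided, two-group comparison of means, n per group = 2((z_{1-α/2} + z_{power})/d)^2, where d is the standardized effect size. The effect size of 0.5, the power of 80%, and the figure of 20,000 tests are illustrative assumptions and are not taken from any particular study; the Bonferroni adjustment is used here only as one example of a stringent correction.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(effect_size, alpha, power=0.8):
    """Approximate per-group sample size for a two-sided two-sample z-test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_power = z.inv_cdf(power)          # quantile corresponding to the target power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

# A single test at the conventional 5% level
n_single = n_per_group(0.5, alpha=0.05)

# The same test with a Bonferroni-adjusted level for 20,000 variables (assumed)
n_bonferroni = n_per_group(0.5, alpha=0.05 / 20000)

print(n_single, n_bonferroni)
```

Under these assumptions the adjusted calculation requires several times as many subjects per group as the single-test calculation, and the gap widens further for smaller effect sizes or higher power.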

It is important to distinguish technical from biological replicates. Technical replication refers to repeating the measurement process on the same subject. It should not be confused with sample size. Technical replicates are useful for evaluating the variability in the measurement process, which may comprise multiple steps, each potentially contributing to the total error in the measurement. Reference [20] (Fig. 1) describes the many steps in gene expression microarray analysis of mouse brains. Technical replication could theoretically be carried out at any of those steps. Sometimes measurements are repeated using an alternative non-high-throughput measurement technique (e.g., RT-PCR assay to measure expression or Sanger sequencing of a specific gene) as a form of measurement validation, but this must not be confused with other forms of validation such as clinical validation of a prediction model (see section “PRED2: Assess performance and validate prediction models”). In the presence of budget constraints, if the goal is to compare different biological conditions, it is advisable to invest in biological replicates. When biological samples are inexpensive compared to the cost of the measurement process, pooling is sometimes recommended as a way to reduce costs by making fewer total measurements [21]. However, caution is advised, as assumptions may be required about assay limits of detection or the correspondence between physical pooling and additivity of measurements [22]. The context of any technical replication must be carefully described along with any methods of summarizing over replicates in order to interpret results appropriately.

Correlogram of 12 male-specific genes expressed as log-counts-per-million from 69 lymphoblastoid cells derived from male (29) and female (40) Yoruba individuals. Variables (genes) are reordered to emphasize the similarity of their relations. Lower triangle: correlations shown by color and intensity of shading; upper triangle: by circle filled proportionally to the correlation strength. Given the symmetrical nature of a correlogram, often different representations are used for the lower and the upper triangles. Source for the data: [27]

Design of a study should ideally be placed in the context of an overarching analysis plan. Each individual study should be designed to produce results of sufficient reliability that its results will inform next steps in the research project.

Methods

Structure of the paper

This paper is organized with respect to subtopics that are most relevant for the analysis of HDD, particularly motivated by typical aims of biomedical studies but also applicable more generally. These subtopics are initial data analysis (IDA and Preprocessing, section “IDA: Initial data analysis and preprocessing”), exploratory data analysis (EDA, section “EDA: Exploratory data analysis”), multiple testing (section “TEST: Identification of informative variables and multiple testing”), and prediction (section “PRED: Prediction”). For each subtopic, we discuss a list of main analytical goals. For each goal, basic explanations, at a minimally technical level, are provided for some commonly used analysis methods. Situations are identified where performance of some traditional, possibly more familiar, statistical methods might break down in the HDD setting or might not be possible to apply at all when p is larger than n. Strengths and limitations of competing approaches are discussed, and some of the gaps in the availability of adequate analytic tools are noted when relevant. Many key references are provided. It should be noted that throughout this paper we are concerned almost exclusively with cross-sectional or independent observations rather than longitudinal observations.

Topics in the paper are organized into sections according to the structure summarized in Table 1, followed by a discussion of the importance of good reporting to improve transparency and reproducible research in the “Discussion” section and a summarizing discussion in the “Conclusions” section.

Results

IDA: Initial data analysis and preprocessing

Initial data analysis (IDA) is an important first step in every data analysis and can be particularly challenging in HDD settings. IDA is a term for all steps of data inspection and screening after the analysis plan and data collection have been finished but before the statistical analyses are performed [23, 24]. It focuses on understanding the context in which the data were collected, on data cleaning (see section “IDA1: Identify inconsistent, suspicious or unexpected values”), and on data screening (see section “IDA2: Describe distributions of variables, and identify missing values and systematic effects due to data acquisition”). Data cleaning refers to identifying and possibly correcting errors. Data screening includes reviewing the characteristics of the data that could affect the subsequent analysis plan, for example, describing distributions of variables, checking assumptions required for model fitting and hypothesis testing, describing missing values, and identifying the need for adjustments of systematic effects due to data collection. Systematic effects may include batch effects that are caused, e.g., by different technologies used for collecting the data or even by different technicians performing laboratory experiments; see section “IDA3.2: Batch correction” for details. Further, initial steps may include simplification of data, e.g., by excluding or collapsing variables, if deemed appropriate. Insights about the data gained from these screening steps might lead to refinement or updating of an analysis plan to ensure that the data are consistent with any assumptions or requirements of the proposed analysis strategies (see section “IDA4: Simplify data and refine/update analysis plan if required”). However, IDA should always be conducted independently of the analysis needed to address the research questions, in order to avoid biasing conclusions.

The term “data preprocessing” is often used in biomedical research involving analysis of HDD, especially in the omics field, to denote certain initial data cleaning and screening steps falling within the more general category of “initial data analysis.” Data preprocessing refers to the process of transforming “raw” data, obtained directly from the measurement instrument, into quantifications that are suitable for the subsequent statistical analysis. This includes detection and handling of incomplete, incorrect or inaccurate values, application of normalization methods that aim to remove systematic biases (e.g., assay batch effects), and transformations of variables [25].

A first step of the data cleaning and screening process is often to standardize the names or terms of variables and observations, especially for omics data compiled using different technologies. This type of standardization helps facilitate other, more complex downstream analyses and interpretation of results, as well as better online dissemination and archiving of data.

The IDA material is organized for ease of discussion, but the IDA process is typically iterative. Preprocessing is discussed in section “IDA3: Preprocessing the data,” but after preprocessing one may need to go back to the data cleaning and screening steps described in sections “IDA1: Identify inconsistent, suspicious or unexpected values” and “IDA2: Describe distributions of variables, and identify missing values and systematic effects due to data acquisition.” Note also that some model-based methods used for the identification of informative variables incorporate normalization into the data analysis model (see section “TEST: Identification of informative variables and multiple testing”).

IDA1: Identify inconsistent, suspicious or unexpected values

Identification and handling of incomplete, incorrect, or inaccurate values is logically a first step in IDA. Attention is directed toward distinguishing aberrant values that clearly originate from the data collection or generation process from those that might reflect true biological variability. Both visual and analytical inspections of the data are used for the detection of such values.

IDA1.1: Visual inspection of univariate and multivariate distributions

Graphical displays are helpful to both understand the structure of the data and detect potential anomalies. For HDD, it is rarely feasible to conduct a detailed examination of the distribution of every variable individually. Visual displays might be constructed only after variables of interest have been identified, for example because a gene is differentially expressed between two experimental conditions or because a particular variable is identified to have an unusual distribution by calculation of summary statistics or has an outlier. A practical alternative is to first calculate scores (summary statistics) for each variable or pair of variables, and then select both typical and interesting atypical variables, with respect to distributions of the scores, for more detailed inspection of their univariate or bivariate distributions. Types of scores to be used in these analyses should include those that capture specific features of the distributions, including measures of location, dispersion, skewness, kurtosis for univariate distributions, linear relationships for bivariate distributions, and metrics to detect outliers or influential values (Table 2).
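
The score-then-inspect strategy described above can be sketched as follows: a few summary scores are computed for every variable, and the variables are then ranked by one of the scores to decide which ones to plot in detail. The particular scores (mean, standard deviation, skewness, and the largest robust z-score based on the median absolute deviation), the variable names, and the toy values are assumptions chosen for illustration.

```python
from statistics import mean, median, pstdev

def variable_scores(values):
    """Summary scores for one variable measured across subjects."""
    m = mean(values)
    s = pstdev(values)
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)  # median absolute deviation, a robust measure of spread
    skew = mean([((v - m) / s) ** 3 for v in values]) if s > 0 else 0.0
    # Largest robust z-score: how far the most extreme value lies from the median
    robust_z = max(abs_dev) / (1.4826 * mad) if mad > 0 else float("inf")
    return {"mean": m, "sd": s, "skewness": skew, "max_robust_z": robust_z}

# Hypothetical data: three variables measured on eight subjects
data = {
    "gene_a": [5.1, 4.9, 5.0, 5.2, 4.8, 5.0, 5.1, 4.9],
    "gene_b": [2.0, 2.5, 1.5, 2.2, 1.8, 2.1, 1.9, 2.3],
    "gene_c": [3.0, 3.1, 2.9, 3.0, 3.2, 2.8, 3.1, 9.0],  # contains one extreme value
}

scores = {name: variable_scores(vals) for name, vals in data.items()}

# Select the most atypical variable for closer visual inspection
most_atypical = max(scores, key=lambda name: scores[name]["max_robust_z"])
print(most_atypical, scores[most_atypical])
```

In practice one would inspect the distribution of each score across all variables and choose both typical and atypical variables from it, rather than only the single most extreme one.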

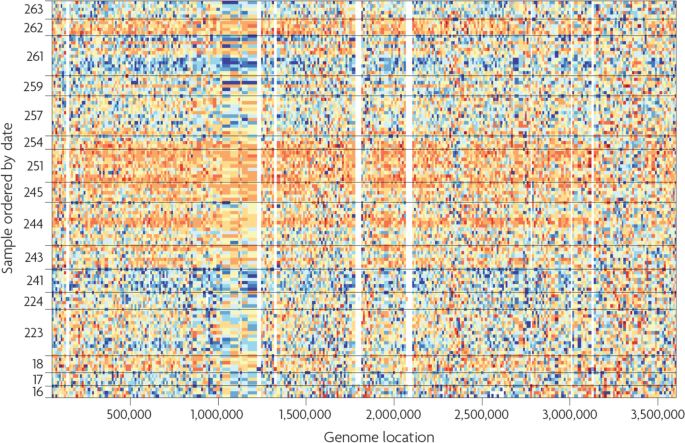

Example of a heatmap, which is a data representation in the form of a map in which data values are color coded. Here, sequencing data from the 1000 genomes project [30] are visualized. Rows correspond to samples and are ordered by processing date, and columns represent genome location of the corresponding sequence. One can see that for the dates 243–254, orange color indicating high values is overrepresented, compared to blue color indicating low values. This demonstrates that so-called batch effects are present, i.e., systematic biases in the data, which are discussed in detail in section “IDA3.2: Batch correction.” Source for the data: [29]

IDA2: Describe distributions of variables, and identify missing values and systematic effects due to data acquisition

IDA2.1: Descriptive statistics

For understanding the structure of data, often univariate measures for location and scale of the variables are informative. In the HDD setting, graphical display is often helpful to scan these measures across the large number of variables, both for detecting anomalies in the data and for a general exploration of variable distributions and their consistency with assumptions required for certain analysis methods. An example of the use of boxplots and of smooth histograms for exploratory purposes can be found in [31].

Standardization of data values is often performed prior to data analyses. Typically, this refers to normalization with respect to scale and location (e.g., subtract mean or median and divide by standard deviation). This can be helpful to give variables similar weight, especially if they are measured on different scales. However, standardization removes information about absolute magnitude of effects, so it should not be used when the actual magnitude of differences is of interest (e.g., differences in mean expression values between two groups). Another caution is that HDD will typically contain a certain number of variables that are uninformative because they do not vary much across observations, with variability essentially reflecting noise in the data. Standardization of such variables can exaggerate the noise to give these variables undue influence in analyses that is on par with that of truly informative variables. It is often preferred to drop such uninformative variables at the start of analyses (Table 3).
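
The two points made above, dropping near-constant variables and then standardizing the remainder, can be sketched as follows. The threshold `min_sd` and the toy data are hypothetical; an appropriate threshold depends on the measurement scale and the assay.

```python
from statistics import mean, stdev

def filter_and_standardize(data, min_sd):
    """Drop variables whose standard deviation is below min_sd, then z-score the rest."""
    kept = {}
    for name, values in data.items():
        s = stdev(values)
        if s < min_sd:
            continue  # near-constant variable: standardizing it would only amplify noise
        m = mean(values)
        kept[name] = [(v - m) / s for v in values]
    return kept

# Hypothetical data: one variable with real variation, one essentially flat
data = {
    "informative": [1.0, 3.0, 5.0, 7.0, 9.0],
    "flat": [2.00, 2.01, 1.99, 2.00, 2.01],
}

standardized = filter_and_standardize(data, min_sd=0.1)
print(sorted(standardized))
```

Had the flat variable been standardized instead of dropped, its tiny fluctuations would have been rescaled to unit variance, giving it the same weight as the informative variable in any scale-sensitive analysis.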

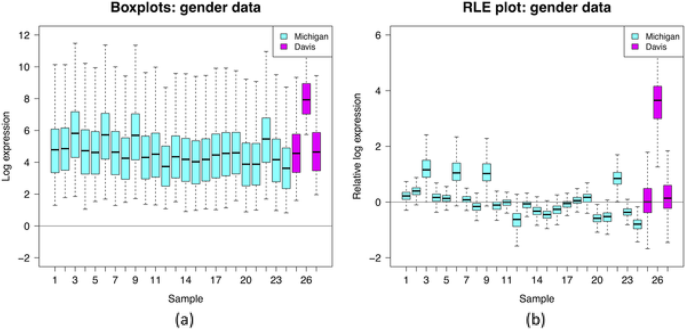

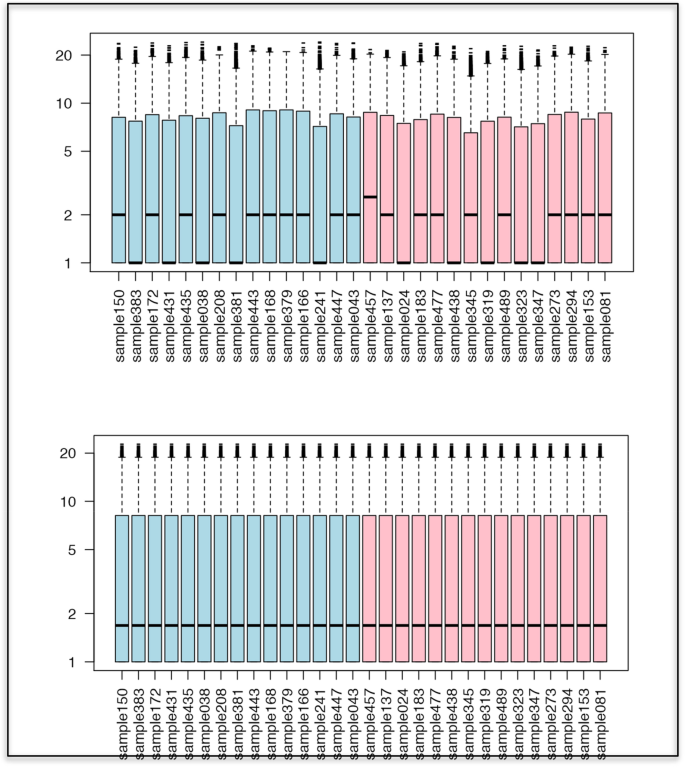

Visualization of the insights obtained from an RLE plot, representing (a) log gene expression distributions for 27 samples (without performing quantile normalization) and (b) relative log gene expression distributions for the same 27 samples. The RLE plot makes it possible to highlight the unwanted variation due to the between-batch variation (cyan versus magenta boxplots) as well as the within-batch variation as suggested by both the difference in location (median further from 0) and spread (higher IQR) of the boxplots. This interpretation is under the often-plausible assumption that expression levels of most genes are unaffected by the biological factors of interest. Source: [32]
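
The quantities behind an RLE plot can be computed in a few lines: for each gene, the median log expression across samples is subtracted from every sample's value, and the resulting deviations are then summarized per sample by their median and interquartile range. The sketch below uses made-up values for four samples and five genes, with the hypothetical sample `s4` shifted upward to mimic unwanted variation.

```python
from statistics import median, quantiles

def rle(log_expr):
    """Relative log expression: deviation of each value from its gene's median.

    log_expr: dict mapping sample name -> list of log expression values,
    with genes in the same order for every sample.
    """
    samples = list(log_expr)
    n_genes = len(log_expr[samples[0]])
    gene_medians = [median([log_expr[s][g] for s in samples]) for g in range(n_genes)]
    return {s: [log_expr[s][g] - gene_medians[g] for g in range(n_genes)] for s in samples}

def summarize(rle_values):
    """Per-sample median and IQR of the RLE values (the numbers a boxplot displays)."""
    summary = {}
    for s, vals in rle_values.items():
        q1, _, q3 = quantiles(vals, n=4)
        summary[s] = {"median": median(vals), "iqr": q3 - q1}
    return summary

# Hypothetical log expression values; sample s4 is shifted upward by about 1
log_expr = {
    "s1": [5.0, 7.0, 3.0, 6.0, 4.0],
    "s2": [5.1, 6.9, 3.1, 6.0, 4.1],
    "s3": [4.9, 7.1, 2.9, 6.1, 3.9],
    "s4": [6.0, 8.0, 4.1, 7.0, 5.0],
}

summary = summarize(rle(log_expr))
for s, stats in summary.items():
    print(s, round(stats["median"], 2), round(stats["iqr"], 2))
```

Samples whose RLE median lies far from zero, or whose IQR is clearly larger than that of the others, are candidates for closer scrutiny.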

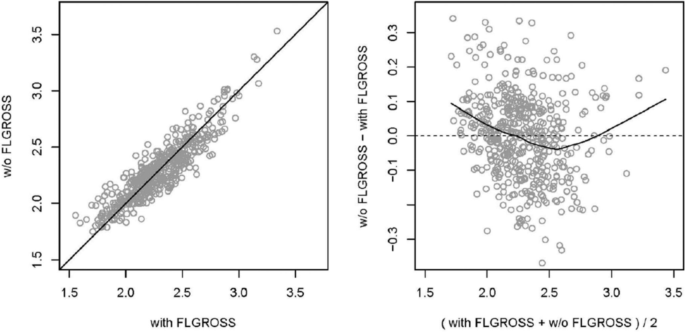

Comparison of a scatterplot (left) and a Bland–Altman plot (right; called an MA plot when applied to omics data) of the same data. In this example, the predicted values of two regression models (including and excluding a variable called FLGROSS) are compared. The scatterplot shows similar values for most observations, with points close to the diagonal. The Bland–Altman plot, with differences on the y-axis (for MA plots of omics data, typically log-ratios), better visualizes how the differences depend on the average value of the predictions (for MA plots, typically the average log intensity). The smoothing line in the example Bland–Altman plot indicates the shape of dependence of the differences on the average values. Source: [35]
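
For two sets of positive intensities measured on the same variables, the coordinates of an MA plot are the log-ratio M = log2(x) - log2(y) and the average log intensity A = (log2(x) + log2(y))/2. A minimal sketch, with made-up intensities and a hypothetical function name:

```python
from math import log2

def ma_values(x, y):
    """Return (M, A): log-ratios and average log intensities for paired measurements."""
    m = [log2(a) - log2(b) for a, b in zip(x, y)]
    a = [(log2(u) + log2(v)) / 2 for u, v in zip(x, y)]
    return m, a

# Hypothetical intensities for three variables under two conditions
x = [8, 16, 64]
y = [4, 16, 16]
M, A = ma_values(x, y)
print(M)  # [1.0, 0.0, 2.0]
print(A)  # [2.5, 4.0, 5.0]
```

Plotting M against A (rather than y against x) rotates the diagonal of the scatterplot to the horizontal line M = 0, which makes any intensity-dependent trend in the differences easier to see.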

IDA2.2: Tabulation of missing data

Missing values are ubiquitous in real-world data and may have major implications for choice of analysis methods and interpretation of results [36]. In fact, most multivariable and multivariate analysis methods have as their default requirement that values of all variables are available for all subjects, i.e., all observations are “complete.” An important early step in any analysis is tabulation of the missing values, i.e., the identification of the number of missing values per subject and per variable, respectively, to provide an overview of the missing data structure. In multi-omics integrative studies, high-dimensional data from different data types are collected for the same subjects. In such studies, small sample sizes caused by experimental and financial constraints, which can also vary between data types, can be a reason for missing data, which has to be taken into account in the subsequent statistical analysis.
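
Tabulating missing values per subject and per variable requires only two counts over the data matrix. In the sketch below, missing values are represented by `None`; the subject identifiers, variable names, and values are hypothetical.

```python
def tabulate_missing(records, variables):
    """Count missing values (None or absent) per subject and per variable."""
    per_subject = {
        sid: sum(rec.get(v) is None for v in variables)
        for sid, rec in records.items()
    }
    per_variable = {
        v: sum(rec.get(v) is None for rec in records.values())
        for v in variables
    }
    return per_subject, per_variable

# Hypothetical data: three subjects, three variables
variables = ["gene_a", "gene_b", "protein_x"]
records = {
    "subj1": {"gene_a": 5.1, "gene_b": 2.0, "protein_x": None},
    "subj2": {"gene_a": None, "gene_b": 2.5, "protein_x": None},
    "subj3": {"gene_a": 4.8, "gene_b": 1.9, "protein_x": 0.7},
}

per_subject, per_variable = tabulate_missing(records, variables)
print(per_subject)   # {'subj1': 1, 'subj2': 2, 'subj3': 0}
print(per_variable)  # {'gene_a': 1, 'gene_b': 0, 'protein_x': 2}
```

With many variables, the two sets of counts are themselves best summarized graphically, for example as histograms of the proportion missing per variable and per subject.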

IDA2.3: Analysis of control values

Laboratory assay measurements can be affected by technical artifacts related to factors such as reagent lots, equipment drift, or environmental conditions. Sometimes these artifacts can be detected, and potentially adjusted for, through use of control and reference standard samples, which have expected distributions of measurements. For single-analyte assays, a calibration process is typically performed to adjust raw measurements and produce final reported values (Table 4).

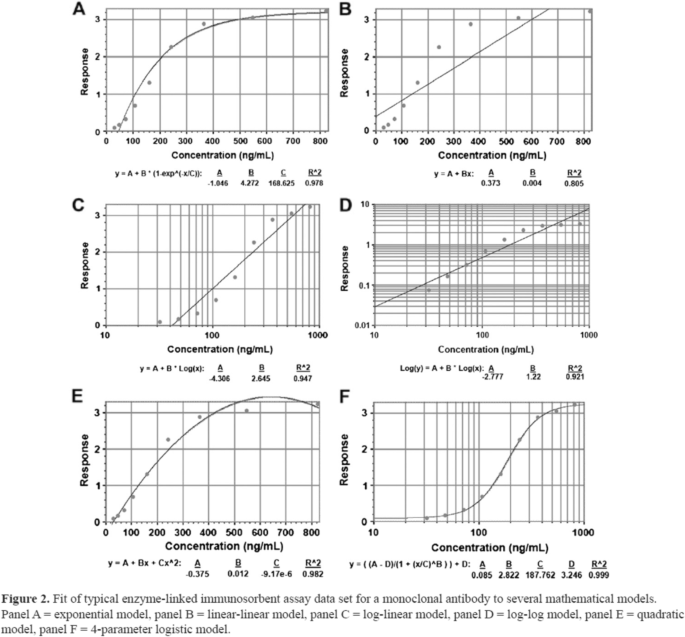

Visualization of calibration curves, representing the relationship between values of an analyte measured on a set of samples by some experimental assay (y-axis) and values obtained for those samples from some reference assay that is considered to represent truth and to be measured with negligible error (x-axis). The curve may be inverted to correct values obtained from the experimental assay to bring them closer to values of the analyte that would have been expected from the reference assay. Source: [37]
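
The inversion step can be illustrated with a deliberately simple sketch that assumes a straight-line calibration curve, y = a + b·x, fitted by least squares to reference standards; in practice calibration curves are often nonlinear, and the fitting and inversion would follow the curve form appropriate for the assay. All values and function names below are hypothetical.

```python
from statistics import mean

def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

def calibrate(y_observed, intercept, slope):
    """Invert the fitted curve: map an experimental reading back to the reference scale."""
    return (y_observed - intercept) / slope

# Hypothetical reference standards: known values (x) and experimental readings (y)
reference = [1.0, 2.0, 3.0, 4.0, 5.0]
experimental = [2.5, 4.5, 6.5, 8.5, 10.5]

intercept, slope = fit_line(reference, experimental)

# Correct a new experimental reading of 7.5
corrected = calibrate(7.5, intercept, slope)
print(round(intercept, 3), round(slope, 3), round(corrected, 3))
```

The inversion is only trustworthy within the range covered by the reference standards; readings outside that range would require extrapolation of the curve.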

IDA2.4: Graphical displays

Systematic artifact effects arising from data acquisition processes can often be detected with graphical displays that visualize the data in a comprehensive manner. A widely used graphical representation for multivariate data is a principal components plot, which is also useful in exploratory data analysis, as described in section “EDA: Exploratory data analysis” (Table 5).

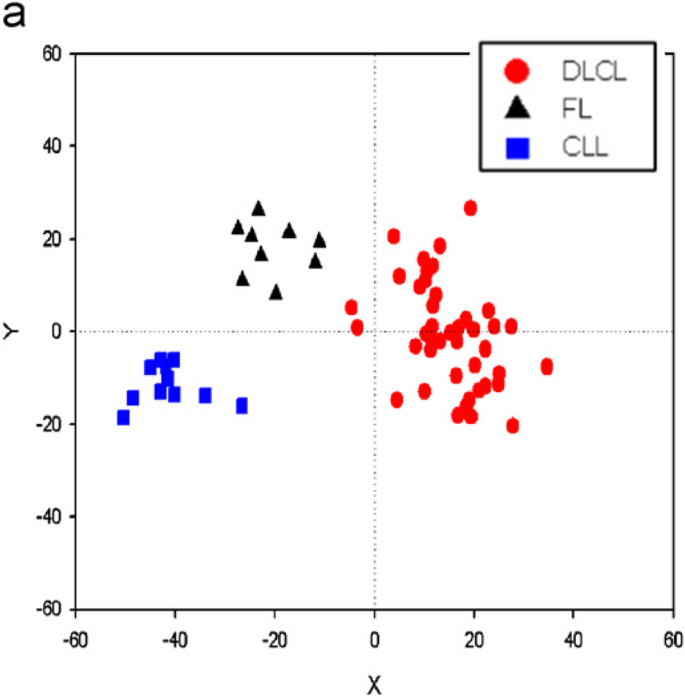

Principal component analysis plot depicting 62 lymphoma samples represented by their first and second principal component calculated from gene expression profiles comprising expression levels of 4026 genes on each lymphoma sample. The samples have been annotated in the plot according to pathologic subtype: 11 B-cell chronic lymphocytic leukemia (B-CLL; blue squares), 9 follicular lymphoma (FL; black triangles), and 42 diffuse large B-cell lymphoma (DLCL; red dots). Source: [39]

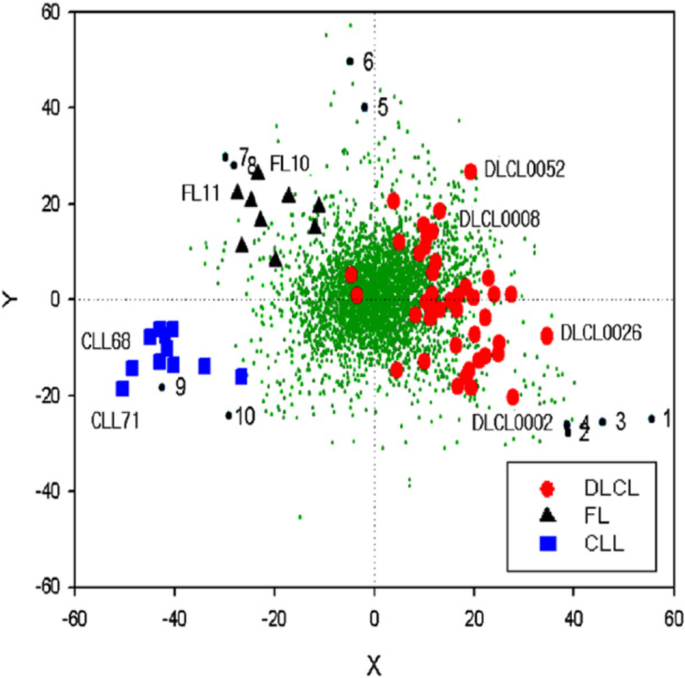

Biplot constructed by superimposing a PCA plot of 62 lymphoma samples (see Fig. 6) onto a PCA plot of genes where first and second principal component for the genes are calculated from gene expression profiles comprising expression levels of the 62 samples for each gene. Genes are represented in the plot as small green dots. Genes representing the three classes well are indicated by numbers. Source: [39]

IDA3: Preprocessing the data

Data generated by omics assay technologies typically require preprocessing by specially tailored methods that are based on understanding of the sophisticated instrumentation and scientific underpinnings of the technologies. Omics data are some of the most frequently encountered HDD in biomedical settings and are the focus in this paper. However, similar challenges exist with other types of HDD in biomedical research. Notably, high-dimensional imaging data are becoming commonplace, with examples including those generated by digital radiography, PET scans, and magnetic resonance imaging. In the following, we explain the main principles of data preprocessing using omics data examples.

Omics technologies are highly sensitive to experimental conditions and can exhibit systematic technical effects due to time, place, equipment, environmental conditions, reagent lots, operators, etc. In general, the first step of preprocessing aims to obtain an “analyzable” signal from the “raw” measurements. Subsequently, the signal is separated from possible systematic technical effects. The corrected signal may then be transformed to fulfill certain distributional properties, e.g., approximating a normal distribution. Note that sometimes the transformation may be applied before correcting the signal.

Preprocessing aimed at removal of systematic effects is often conducted as a separate step, as part of the IDA process, before the statistical analysis for answering the research question is undertaken. If the data have already been corrected for systematic effects and retain only the signals of interest (e.g., treatment effects), then the preprocessed (“normalized”) measurements for the biological samples can be analyzed using statistical methods that are easily accessible to researchers. However, conducting normalization as a separate step has important disadvantages. For instance, the normalized values are estimates and therefore carry some uncertainty, which should be taken into account in the analysis of the normalized data; doing so, however, complicates the statistical analysis.

If inferential analysis is of interest, e.g., when comparing groups of samples to assess for biological differences, then a preferred approach is to consider normalization as part of a comprehensive statistical analysis model. The model is then used both to remove systematic technical differences and to quantify biological effects of interest (e.g., treatment effects). In that case, the uncertainty related to the normalization part of the analysis is naturally included in the estimates of uncertainty (standard errors) of the quantities of biological interest.

IDA3.1: Background subtraction and normalization

Omics data are prone to perturbations due to systematic effects induced by the measurement technology, also referred to as the assay platform. Many of these effects are unique to the assay platform, but there are some commonalities. A biological sample may have its gene expression profile measured using a single microarray or gene chip or its protein profile measured using a mass spectrometry system. The set of experimental conditions that gives rise to profiles such as these will be referred to here as an experimental run. However, even for the same sample, measurements obtained in different runs may differ due to factors such as different amounts of biological material input to the measurement system, settings on the instrumentation, environmental conditions in the laboratory, and so forth. These “between-run” differences may confound the “between sample” comparisons of scientific interest. Thus, these nuisance run effects should be removed to allow valid comparisons among data obtained in different runs. A generic preprocessing step aimed at removing between-run differences is often termed normalization. Even before normalization methods are applied, data generated by omics technologies generally require correction to subtract background noise from measurements to reveal their signal components. In Table 6 we introduce some basic methods for background subtraction and normalization.

Boxplots representing artificial distributions of values for 30 samples (subjects), before quantile normalization (top) and after quantile normalization (bottom), showing that all distributions are fully aligned with each other after the transformation. Source: [44]
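Quantile normalization, the method illustrated in the figure, can be sketched in a few lines. This is a minimal sketch rather than a reference implementation: it assumes a complete numeric matrix with variables in rows and samples in columns, and it breaks ties by order of appearance, whereas established software often averages tied values. The function name `quantile_normalize` is illustrative.

```python
import numpy as np

def quantile_normalize(x):
    """Give every sample (column) the same empirical distribution.

    Assumes rows = variables, columns = samples, no missing values.
    """
    x = np.asarray(x, dtype=float)
    # Sorting order of the values within each sample.
    order = np.argsort(x, axis=0, kind="stable")
    # Reference distribution: mean of the k-th smallest values across samples.
    reference = np.take_along_axis(x, order, axis=0).mean(axis=1)
    # 0-based rank of every value within its own sample.
    ranks = np.argsort(order, axis=0)
    # Replace each value by the reference value of the same rank.
    return reference[ranks]
```

After this transformation all samples share exactly the same set of values, while the ordering of variables within each sample is preserved.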

IDA3.2: Batch correction

Another example of a systematic effect that is common to many technologies is a “batch effect.” The effect may arise when groups of biological samples (“batches”) have something in common in the way they are processed, e.g., same day or time of day, on same instrument, same operators, but these aspects are different for other groups of samples. Besides these measurement conditions, factors at play prior to measurement can cause batch effects. For example, clinical centers might differ in their standard operating procedures for processing, handling, and storing biospecimens, giving rise to pre-analytic factors that could influence downstream measurements. Patient characteristics, co-morbidities, or concomitant medications could additionally vary by batch, and may give rise to different distributions of measured values that have biological basis. Batch effects are widespread [29]. The challenge for batch correction is removal of nuisance effects such as those due to pre-analytic or technical factors while not inadvertently removing true biological differences. To facilitate appropriate correction, batch information such as dates, instrument, operator, and specimen collection sites should be recorded and patient factors might need to be taken into account in analyses. Above all, it is critical to avoid poor study designs in which important patient characteristics (including outcomes) are confounded with nuisance batch effects, as this could make it impossible to remove nuisance batch effects adequately.

Preprocessing of omics data aimed at removal of the aforementioned artifact effects poses several challenges. For instance, normalization is often data-driven and uses methods based on assumptions about the nature of the biological mechanisms. If those assumptions do not hold, then the methods might not work as intended. An example of a commonly made assumption in experiments involving genome-wide expression data is that most genes are not differentially expressed under the compared conditions. It may be challenging to verify whether such assumptions are correct.

The dependence of systematic effects on the platform raises an important issue for novel technologies, for which sources of measurement variation may not be fully established or understood. Out of convenience, preprocessing approaches developed for one platform have often been applied to other platforms. For example, normalization methods developed for microarrays are also used for proteomic [45] and metabolomic [46] mass spectrometry experiments. This might be reasonable in some settings, but the assumptions required for adequate performance of a normalization method should always be reviewed carefully for appropriateness prior to its application to another technology.

In addition, it is worth noting that preprocessing may need to be tailored to the analysis goals. For instance, it is problematic to remove batch effects when constructing a classification rule. This is because the measurements for a new sample presented for classification will most likely include effects from batches not represented in the data used to construct the classification rule. Consequently, a classification rule should be constructed using data that have not been batch corrected so that robustness to batch effects can be built in (Table 7).

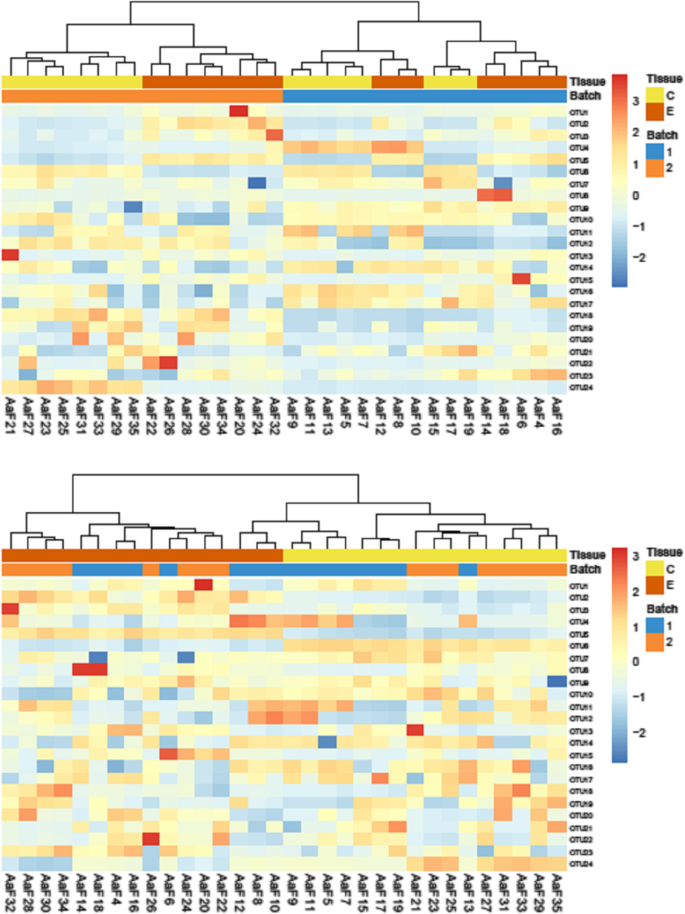

Visualization of the effect of batch correction. Heatmaps of hierarchical clustering of sponge metagenomics data studying two tissue types (C and E) with 2 batches, before and after ComBat batch correction. Without batch correction (top figure), the clustering is mainly driven by the batch effect. After correction, the clustering is driven by the tissue type (bottom figure). Source: [49]

IDA4: Simplify data and refine/update analysis plan if required

The findings from the IDA steps can have substantial impact on the choice of appropriate analytical methods for subsequent statistical analyses. Therefore, the analysis plan should be refined or updated as necessary and according to the relevant findings from the IDA analysis [23].

IDA4.1: Recoding

Recoding primarily refers to transformations of the (original, raw) data, which allow for easier handling for a specific purpose. This is particularly useful in HDD settings, in which simple representation of the information can be challenging and sometimes even impossible due to the large number of variables (Table 8).

IDA4.2: Variable filtering and exclusion of uninformative variables

Variable filtering refers to the exclusion of variables that are considered uninteresting, before the statistical analysis to address the main research question is even started. This practice is widespread in HDD analysis where any steps to reduce the dimensionality and complexity of models at the outset are appreciated. If many irrelevant variables are filtered out, the multiple testing problem (see section “TEST: Identification of informative variables and multiple testing”) is diminished, and the statistical power of subsequent analysis steps can substantially increase. However, as discussed below, caution is required when applying certain filtering strategies that may introduce bias (Table 9).

IDA4.3: Construction of new variables

Sometimes it is useful to construct new variables as an initial step of data analysis by combining the variables that are available in the dataset in a meaningful way, using expert knowledge. For example, in medical studies investigating factors affecting health, overweight status is often an important variable to consider in the analysis. Because weight and height must be considered together in assessing whether an individual is overweight, constructed variables like body mass index (BMI) have been used. The importance of fat distribution has also been recognized, and it has motivated the combined measure of waist-hip ratio (WHR). Instead of relying on the ability of statistical methods and algorithms to construct such variables implicitly, e.g., during a statistical modelling process, it is useful to be informed by expert knowledge and to include these constructed variables directly into analyses.
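The two constructed variables mentioned above are simple ratios of measured variables. The following sketch shows how they might be added to a dataset before analysis; the record layout, field names, and values are hypothetical.

```python
def body_mass_index(weight_kg, height_m):
    """BMI: weight in kilograms divided by squared height in meters."""
    return weight_kg / height_m ** 2

def waist_hip_ratio(waist_cm, hip_cm):
    """WHR: waist circumference divided by hip circumference (same units)."""
    return waist_cm / hip_cm

# Hypothetical subject records; the constructed variables are added in place.
subjects = [
    {"weight_kg": 70.0, "height_m": 1.75, "waist_cm": 80.0, "hip_cm": 100.0},
    {"weight_kg": 95.0, "height_m": 1.80, "waist_cm": 105.0, "hip_cm": 100.0},
]
for s in subjects:
    s["bmi"] = body_mass_index(s["weight_kg"], s["height_m"])
    s["whr"] = waist_hip_ratio(s["waist_cm"], s["hip_cm"])
```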

Not all constructed variables are derived using expert knowledge. Some, like principal component scores (see section “IDA2.4: Graphical displays”), are constructed in an unsupervised manner, meaning that they capture features of the data based only on the explanatory variables without using dependent variables such as outcomes. These constructed variables are sometimes used as explanatory variables when building prediction models (see section “PRED: Prediction”), and they can also be used for exploratory data analysis (see section “EDA: Exploratory data analysis”). As discussed in section “IDA2.4: Graphical displays,” plots of (typically the first two) principal components are often helpful for detecting peculiarities in the data or problems such as batch effects. Some constructed variables are derived using outcomes or other dependent variables. Examples of outcome-informed constructed variables include supervised principal component scores [55] and partial least squares (PLS) scores (see section “PRED1.3: Dimension reduction” for further discussion). Sometimes new variables are constructed by discretization of continuous variables, but this practice is problematic and should generally be discouraged (Table 10).

IDA4.4: Removal of variables or observations due to missing values

The simplest approach to deal with missing data is a “complete case analysis.” That is, if a single variable is missing for an observation, the observation is fully excluded from the dataset. Basing analyses on only complete cases at best only leads to loss of statistical power, but at worst can lead to substantially biased analyses. Impact of missing data will depend on how many cases have missing data, how many variables have missing values, how many values are missing, and whether the likelihood of missing values in a variable is related to the value of that variable or other variables. When few observations have missing values for few variables, then the impact on results of subsequent analyses may be limited, but when the number is large, the impact can be substantial.

A typical strategy for dealing with missing data is to exclude variables from the analysis that have a large number of missing values. Obviously, the possible relevance of such variables is neglected. Only when the missingness (the events that lead to a value being missing) is independent of both unobserved and observed values, i.e., the data are missing “completely at random” (MCAR), are the results of the complete case analysis (using likelihood-based methods) unbiased. When missing values depend on the unobserved values themselves (e.g., it is more likely that the measurement of a variable is missing when the value of the biomarker is very high or very low), then the missing values are said to be “missing not at random” (MNAR), and the resulting complete case analysis is biased.

Between the two extreme situations of MCAR and MNAR, there is a third possibility: missing values are called “missing at random” (MAR), when the missingness is independent of the unobserved values after controlling for the other available variables. One way to diagnose whether data are MCAR or MAR is to tabulate a missing value indicator against the values of other variables. As an example, if the value of a biomarker (e.g., gene expression level) is missing with higher frequency in males than in females, but within these strata, the missing values are missing completely at random, then it is likely a situation of MAR and not MCAR.
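The tabulation described above can be sketched as follows, using the biomarker-by-sex example. The data are made up for illustration and `missing_rate_by_group` is a hypothetical helper. A clear difference in missingness between strata argues against MCAR, but such a table alone cannot rule out MNAR.

```python
from collections import defaultdict

def missing_rate_by_group(values, groups):
    """Fraction of missing values (None) of one variable within each group."""
    n = defaultdict(int)
    n_missing = defaultdict(int)
    for value, group in zip(values, groups):
        n[group] += 1
        n_missing[group] += value is None
    return {group: n_missing[group] / n[group] for group in n}

# Made-up expression values for one gene; None marks a missing measurement.
expression = [2.1, None, 3.4, None, 1.9, 2.8, None, 3.0, 2.2, 2.5]
sex = ["M", "M", "F", "M", "F", "F", "M", "F", "M", "F"]

rates = missing_rate_by_group(expression, sex)
```

In this toy example the biomarker is missing for three of five males and for none of the females, the kind of pattern that would suggest the data are not MCAR.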

In HDD settings, when a large number of variables must be considered, complete case analysis may require exclusion of too many observations. To avoid this, common approaches involve first removing variables for which more than a specified percentage (e.g., 5 or 10%) of observations are missing and then removing observations for which more than a specified percentage (e.g., 2%) of variables have missing values. For studies with more complex designs, additional considerations may apply. For example, it is common in case–control studies to remove variables for which there is larger imbalance (e.g., more than 5 or 10% difference) in the percentage of missing values between cases and controls.
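The two-stage filter described above might look as follows. This is a sketch under stated assumptions: observations are in rows, variables in columns, missing values are coded as `np.nan`, and the thresholds are the example percentages from the text, passed as hypothetical parameters `max_var_missing` and `max_obs_missing`.

```python
import numpy as np

def filter_missing(x, max_var_missing=0.10, max_obs_missing=0.02):
    """Drop variables, then observations, with too many missing values.

    Returns the filtered matrix and boolean masks of the kept rows/columns.
    """
    x = np.asarray(x, dtype=float)
    missing = np.isnan(x)
    # Stage 1: keep variables whose fraction of missing observations is small.
    keep_var = missing.mean(axis=0) <= max_var_missing
    # Stage 2: missingness per observation, computed on retained variables only.
    keep_obs = missing[:, keep_var].mean(axis=1) <= max_obs_missing
    return x[np.ix_(keep_obs, keep_var)], keep_obs, keep_var
```

Computing the per-observation missingness only after variables have been removed is a design choice of this sketch; it avoids discarding observations because of variables that are dropped anyway.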

IDA4.5: Imputation

For MAR situations, methods more sophisticated than complete case analyses or dropping variables are recommended to use the information from all observations in the study and obtain less biased results. An example method is multiple imputation, which is described below. Although imputation is a useful strategy, it should be understood that no single approach for dealing with missing data is fully satisfactory. Thus, the best approach is to carefully select variables that are both informative and feasible to collect when designing studies and then work diligently to collect those data as completely as possible in order to minimize the amount of missing information. In the context of LDD, a framework for the treatment and reporting of missing data was proposed [58].

For HDD, performing a simple multivariable regression in high dimensions is typically not feasible. Therefore, most procedures for handling missing data in the HDD setting either involve a phase for selecting for imputation only those variables that are deemed important or try to use some regularized regression [59] instead of standard multivariable regression. The handling of missing data in HDD settings is an active topic of research. Many tailor-made imputation algorithms have already been developed; for an early overview in the context of gene expression measurements, see [60] (Table 11).

EDA: Exploratory data analysis

When performing statistical analyses, it is important to distinguish between exploratory data analysis (EDA) and confirmatory data analysis, as this has important consequences both for the selection of appropriate analytical methods and for the correct interpretation of the results. The starting point for confirmatory analysis is a hypothesis to be evaluated, whereas in EDA, the goal is to provide an unbiased view of the data. Insights from EDA may then lead to development of new hypotheses that can be evaluated in subsequent confirmatory analyses on independent data.

Caution is necessary when performing statistical inference (e.g., feature selection as described in section “TEST: Identification of informative variables and multiple testing”) or model performance assessment following EDA when decisions to remove or modify observations from the analysis might depend on the observed relationships one is trying to confirm. For example, if outlier observations are removed from a dataset, the performance of a prediction model built only on the remaining observations is most probably an overly optimistic estimate of what the model performance would be on an independent dataset, which might contain different outliers.

Two major analytical goals for EDA are (1) to identify interesting data characteristics such as variables with extreme values, associations between variables, or representative subjects with usual values of variables, and (2) to gain insight into the structure of the data. Note that many of the methods used in EDA are also applied in IDA (like PCA; see section “IDA2.4: Graphical displays”). In this section, we focus on methods that are more specific to EDA. Note that many of the methods described in this section are generally designed and suitable for continuous data; only some can also be applied for discrete data.

EDA1: Identify interesting data characteristics

EDA can assist a researcher to identify interesting data characteristics that may lead to generation of specific scientific hypotheses that can be more fully evaluated in subsequent studies. Through EDA, a researcher might identify variables exhibiting extreme values or study subjects (observations) having extreme values of one or more variables or unusual combinations of values of two or more variables. EDA might also reveal intriguing associations between variables (e.g., levels of a certain protein tend to differ between two phenotypic classes). The two main classes of exploratory methods for identifying such interesting data characteristics are graphical displays and inspection of descriptive univariate and multivariate summary statistics. Graphical displays are discussed in sections “IDA2.1: Descriptive statistics,” “IDA2.4: Graphical displays,” and “EDA1.1: Graphical displays,” whereas descriptive statistics were already described in section “IDA2.1: Descriptive statistics” as tools for the initial data analysis (IDA). It should be noted that due to the potential for identification of many false positive signals in the HDD setting, findings from large-scale comparisons of descriptive summary statistics are often tempered by application of multiple testing methods as described later in section “TEST: Identification of informative variables and multiple testing,” even though the original intent was exploratory analysis.

To identify interesting data characteristics in low-dimensional data via visual or graphical methods, it is usually possible to inspect simple summary statistics and graphical displays of distributions of variables one, two, or three at a time, but for HDD this approach quickly becomes infeasible. For instance, the number of scatterplots for all pairs of p variables is p(p − 1)/2, which already exceeds 1000 when p exceeds 45. Visual identification of interesting characteristics of HDD typically requires specialized graphical displays or reduction of data dimensionality.

EDA1.1: Graphical displays

As mentioned in section “IDA2.4: Graphical displays,” one can use principal components (PCs) for exploratory analysis by first summarizing the information included in all variables through calculation of PC scores (which are linear combinations of the original variables) and then plotting in two or three dimensions the first several PC scores that capture the majority of variability in the data. This may allow identification of clusters of observations or individual observations with unusual configurations of variables warranting further inspection.
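A minimal sketch of the computation of PC scores is given below. It assumes observations in rows and variables in columns, centers but does not scale the variables (whether to scale is a separate analysis decision), and uses the singular value decomposition, which remains practical when the number of variables far exceeds the number of observations. The function name `pc_scores` is illustrative.

```python
import numpy as np

def pc_scores(x, n_components=2):
    """Scores on the first principal components and their variance shares."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean(axis=0)            # center each variable
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    # Scores are linear combinations of the original (centered) variables;
    # the rows of vt hold the corresponding weights (loadings).
    scores = u[:, :n_components] * s[:n_components]
    explained = s ** 2 / np.sum(s ** 2)      # proportion of total variance
    return scores, explained[:n_components]
```

Plotting the first column of `scores` against the second gives the two-dimensional display described above, and `explained` indicates how much of the total variability that display captures.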

Another goal for HDD visualization is to produce a display in lower dimensions that preserves the distances (more generally degrees of “dissimilarity”) between observations such that the closest points remain the closest and the furthest remain the furthest. Alternative data reduction techniques have been developed to achieve this goal. These methods aim to translate the data in such a way that dissimilarities among points in the lower-dimensional space are as proportional as possible to those quantified in the original (high-dimensional) space. One such technique, multidimensional scaling, is described below. A variation of multidimensional scaling not discussed here is correspondence analysis, which is suitable for categorical variables and shows the relationships between variables based on data specified in a contingency table. Cox and Cox [62] provide descriptions of both multidimensional scaling and correspondence analysis (Table 12).
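To make the idea of distance-preserving reduction concrete, the following sketch implements classical (Torgerson) multidimensional scaling, which is only one of several variants of the technique. It assumes that a symmetric matrix of pairwise distances is already available; the function name `classical_mds` is illustrative.

```python
import numpy as np

def classical_mds(d, n_dims=2):
    """Low-dimensional coordinates from a matrix of pairwise distances."""
    d = np.asarray(d, dtype=float)
    n = d.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    # Double-centered squared distances behave like an inner-product matrix.
    b = -0.5 * centering @ (d ** 2) @ centering
    eigval, eigvec = np.linalg.eigh(b)           # eigenvalues in ascending order
    top = np.argsort(eigval)[::-1][:n_dims]      # largest eigenvalues first
    # Negative eigenvalues (non-Euclidean dissimilarities) are clipped to zero.
    return eigvec[:, top] * np.sqrt(np.clip(eigval[top], 0.0, None))
```

When the input distances are Euclidean distances between points that truly lie in `n_dims` dimensions, the returned configuration reproduces them exactly; otherwise it gives an approximation.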

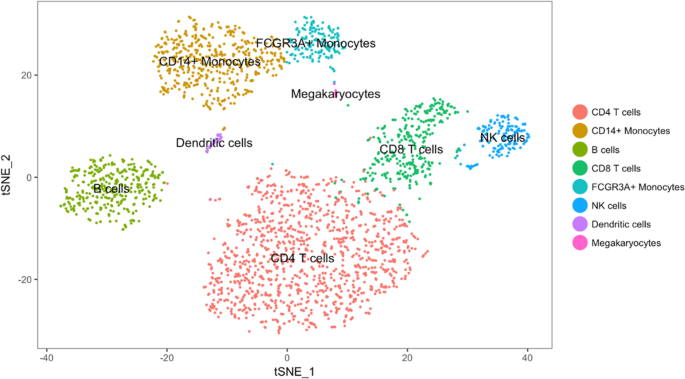

Two-dimensional visualization of a high-dimensional dataset using t-SNE. The dataset consists of 2700 single cells (peripheral blood mononuclear cells) that were sequenced on an Illumina NextSeq 500. The dataset is freely available from 10X Genomics. Points are colored by cell type. The plot shows that the cell types are locally well separated. Source: [71]

EDA2: Gain insight into the data structure

A global depiction of data to identify structure, including patterns or motifs, is another major goal of exploratory data analysis for HDD. Here, data structure is understood in a general sense: it refers to many aspects of the data that concern the arrangement or interrelation of the observations or variables of a dataset. Although a natural first step is to look at marginal distributions (e.g., univariate and bivariate) of all variables across observations, this approach is generally not feasible for HDD for reasons discussed above. Further, some structure may involve many different variables and not be discernible by examination of univariate, bivariate, or even trivariate distributions.

The data visualization techniques described in section “EDA1.1: Graphical displays” are often supplemented with additional approaches geared toward detection of certain kinds of structure, for example clusters. The goal of cluster analysis is to identify subgroups of observations or variables that are similar to each other, but different from others. Identification of prototypical observations to characterize each cluster might be of interest. The structure might also be multi-level. In this section, we focus on techniques that are useful to uncover structure that might be missed by examining only marginal distributions or low-dimensional representations of HDD.

EDA2.1: Cluster analysis

The goal of a cluster analysis is to assemble objects (observations or variables) into subgroups, termed clusters, such that similarities between members within the clusters are high (or, equivalently, distances are small), compared to similarities between members from different clusters. Sometimes, the goal is only to find dense, i.e., heavily populated, regions in the data space that correspond to modes of the data distribution. Alternatively, there may be interest in fully characterizing the structure. Cluster analyses typically require choice of a similarity metric (or, alternatively, distance metric) for pairs of objects (sometimes also for pairs of clusters), a clustering algorithm, and a criterion to determine the number of clusters. Some clustering approaches that have been successfully used for low-dimensional data, e.g., mixtures of low-dimensional parametric probability distributions such as multivariate normal mixtures, either cannot be applied at all or perform very poorly in the HDD setting. Approaches not suitable for HDD are not further discussed here.

For comparing similarity of objects (either variables or observations), the Pearson correlation coefficient or Euclidean distance are the most popular metrics. The Pearson correlation does not depend on the scale of the variables, but the Euclidean distance does. If each of the variables characterizing an object is first standardized across the set of objects (subtract mean and divide by standard deviation), then use of Pearson correlation and Euclidean distance metrics will produce equivalent results. The measure should be chosen deliberately. If only relative levels of the values are important, then Pearson correlation is suitable, but if absolute values matter, then Euclidean distance is appropriate. It is important to note that both metrics tend to be more heavily influenced by a few large differences or deviations than by a series of small ones because the values are squared. An important modification of the Pearson correlation is the Spearman (rank) correlation, where values of observations are first replaced by their corresponding ranks before calculating the Pearson correlation. With this adjustment, the results are less heavily influenced by extreme data values.
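The relationship between the two metrics can be checked numerically. The sketch below assumes that each object's vector of values is centered and scaled to unit (population) standard deviation. Under that assumption the squared Euclidean distance between two objects with m values each equals 2m(1 − r), where r is their Pearson correlation, so the two metrics order pairs of objects identically. The example also shows how a single extreme value influences the Pearson correlation more than the Spearman correlation. The toy vectors and helper names are illustrative, and the rank helper assumes no ties.

```python
import numpy as np

def standardize(v):
    """Center to mean 0 and scale to population standard deviation 1."""
    v = np.asarray(v, dtype=float)
    return (v - v.mean()) / v.std()

def ranks(v):
    """Ranks 1..m of the values in v (assumes there are no ties)."""
    v = np.asarray(v)
    r = np.empty(len(v))
    r[np.argsort(v)] = np.arange(1, len(v) + 1)
    return r

# Two toy profiles; the last value of each is extreme.
a = np.array([1.0, 2.0, 3.0, 4.0, 100.0])
b = np.array([2.0, 1.0, 4.0, 3.0, 50.0])

pearson = np.corrcoef(a, b)[0, 1]
spearman = np.corrcoef(ranks(a), ranks(b))[0, 1]   # Pearson on the ranks
squared_distance = np.sum((standardize(a) - standardize(b)) ** 2)
identity_rhs = 2 * len(a) * (1 - pearson)          # 2m(1 - r)
```

Here the Pearson correlation is dominated by the extreme value and is close to 1, whereas the Spearman correlation also reflects the disagreement among the remaining values.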

In high-dimensional spaces, data are typically quite sparse. This means that distances between objects become large, a phenomenon often referred to as the curse of dimensionality. Therefore, the distance metrics may be prone to exaggeration by a few distant objects. Strategies to help avoid this problem include use of data reduction or variable selection before clustering (see section “IDA2.4: Graphical displays” for graphical displays for dimension reduction and section “PRED1.2: Variable selection” for variable selection and dimension reduction in the context of improving prediction models).

Clustering algorithms can be divided into hierarchical and partitioning methods. In hierarchical clustering, observations are iteratively grouped together into larger clusters (agglomerative hierarchical clustering) or clusters are subdivided into smaller clusters (divisive hierarchical clustering). Centroid-based so-called partitioning algorithms aggregate the observations around specific points (the centroids) such that observations related to the same centroid are as similar as possible, and observations related to different centroids as different as possible. Hierarchical clustering algorithms provide a clustering for any number of clusters, whereas partitioning methods require an initial choice about the number of clusters present in the data. The most popular clustering algorithms are described in Table 13.

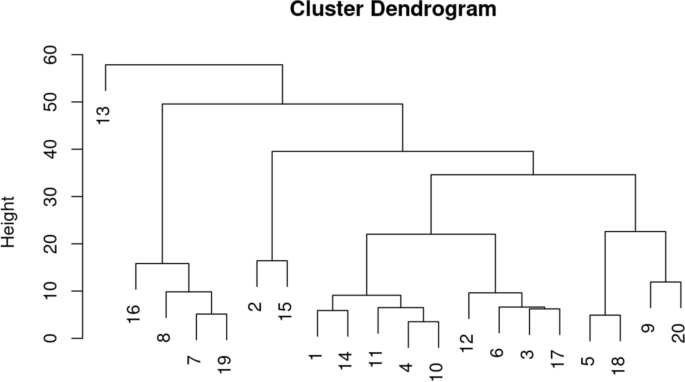

Hierarchical clustering result displayed in a dendrogram, where heights in the tree at which the clusters are merged correspond to the between-cluster distances. Source: [73]
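Agglomerative hierarchical clustering can be sketched as repeated merging of the two closest clusters. The version below is a deliberately naive illustration that assumes Euclidean distances and average linkage (the between-cluster distance is the mean pairwise distance); other linkages are equally common, and established libraries should be preferred in practice because this implementation is slow for large numbers of objects. The function name is illustrative.

```python
import numpy as np

def agglomerative_average(x, n_clusters):
    """Merge clusters bottom-up until n_clusters remain.

    Returns cluster labels and the between-cluster distance of every merge,
    i.e., the heights at which branches of a dendrogram would join.
    """
    x = np.asarray(x, dtype=float)
    d = np.linalg.norm(x[:, None] - x[None], axis=-1)   # pairwise distances
    clusters = [[i] for i in range(len(x))]             # start: singletons
    heights = []
    while len(clusters) > n_clusters:
        best = (np.inf, None, None)
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                dist = d[np.ix_(clusters[a], clusters[b])].mean()
                if dist < best[0]:
                    best = (dist, a, b)
        dist, a, b = best
        heights.append(dist)
        clusters[a] = clusters[a] + clusters[b]         # merge the closest pair
        del clusters[b]
    labels = np.empty(len(x), dtype=int)
    for k, members in enumerate(clusters):
        labels[members] = k
    return labels, heights
```

Stopping the merging at a different value of `n_clusters` corresponds to cutting the dendrogram at a different height, which is why a single hierarchical analysis yields a clustering for any number of clusters.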

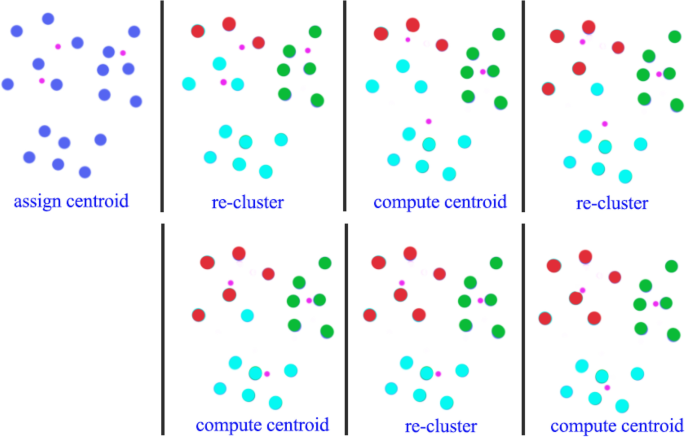

Visualization of the k-means algorithm with an example. Iteratively, observations are assigned to the cluster for which the squared Euclidean distance from the observation to the cluster centroid is minimized, and cluster centroids are computed based on the current cluster memberships. The iterative process continues until no observations are reassigned (as in the case of the last iteration in the figure). Source: [75]
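The two alternating steps described in the caption can be written compactly. The following is a minimal sketch, not a tuned implementation: starting centroids are drawn at random from the observations unless the hypothetical `init` argument supplies them, and an empty cluster simply keeps its previous centroid. Because the result depends on the starting centroids, several random starts are commonly compared in practice.

```python
import numpy as np

def k_means(x, k, init=None, n_iter=100, seed=0):
    """Basic k-means; returns (labels, centroids).

    `init` (optional): indices of observations used as starting centroids.
    """
    x = np.asarray(x, dtype=float)
    if init is None:
        init = np.random.default_rng(seed).choice(len(x), size=k, replace=False)
    centroids = x[np.asarray(init)].copy()
    labels = np.full(len(x), -1)
    for _ in range(n_iter):
        # Assignment step: squared Euclidean distance to every centroid.
        d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break                       # no observation was reassigned
        labels = new_labels
        # Update step: each centroid becomes the mean of its current members.
        for j in range(k):
            if np.any(labels == j):     # an empty cluster keeps its old centroid
                centroids[j] = x[labels == j].mean(axis=0)
    return labels, centroids
```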

Other methods for cluster analysis applied to biomedical data include fuzzy clustering and SOMs (self-organizing maps). In fuzzy clustering, objects can belong to multiple clusters. In SOMs (a type of neural network first introduced by Kohonen [77]), a meaningful topology (spatial relationships) between the cluster prototypes is assumed. This means that the clusters can be visualized as a two-dimensional “map,” so that observations in proximate clusters have more similar values than observations in clusters that are more distant. Since the assumptions for SOMs are not guaranteed to hold, the interpretation can easily be misleading, such that SOMs should only be used by experts in this field. In addition, SOMs can be very sensitive to starting node configurations.

For HDD, the computer runtime of such partitioning algorithms can present a challenge. For example, PAM cannot be applied if the number of objects to be clustered is very large, i.e., for clustering variables in omics data or for clustering observations in large health records data. This challenge motivated development of the algorithm CLARA (Clustering Large Applications) [78], which works on subsamples of the data. Distribution-based clustering methods provide another alternative where probabilistic distributions for the observations within the clusters are assumed (e.g., multivariate Gaussian in each cluster, but with different means and potentially different variances). Parameters of the mixture distribution are typically estimated with EM-type (expectation–maximization) iterative algorithms [79]. However, particularly (but not only) for HDD, the distributional assumptions are often difficult to verify and the algorithms may not converge to a suitable solution. Therefore, clusters might not be identified at all, or the results could be misleading due to incorrect assumptions about the data distributions.

Results produced by clustering algorithms are difficult to evaluate and often require subjective judgement. The validity of the results depends on the notion of a cluster, which varies between clustering algorithms, and this ambiguity carries through to estimation of the number of clusters (Table 14).

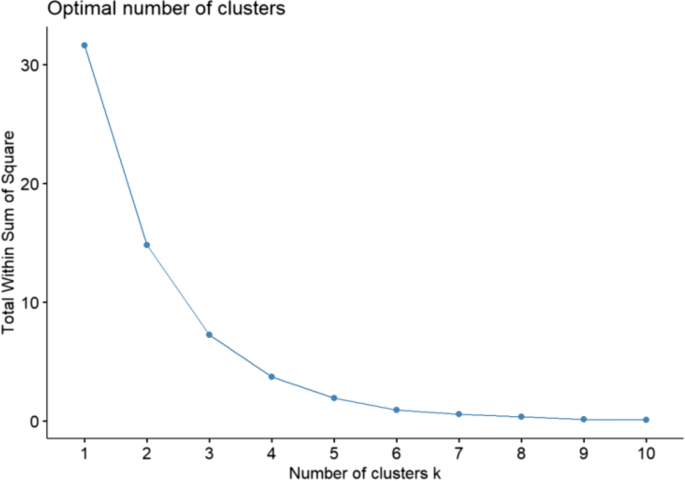

Example of a scree plot, which involves plotting some measure of within-cluster variation (here the total within sum of squares) on the y-axis and the number of clusters assumed in applying the algorithm on the x-axis. Source: [80]

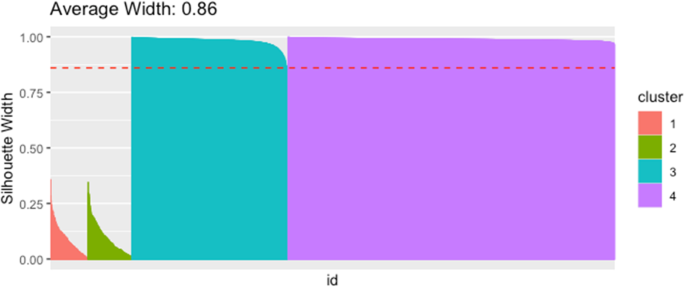

Silhouette values for observations that are grouped into four clusters. Observations are sorted along the x-axis by decreasing silhouette value, grouped by the four clusters. The silhouette values for the observations of the first two clusters have very low values, indicating two not well-separated clusters. Source: [82]
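Silhouette values such as those displayed in the figure can be computed directly from pairwise distances and cluster labels. The sketch below uses the usual definition, s = (b − a) / max(a, b), where a is the mean distance of an observation to the other members of its own cluster and b is the smallest mean distance to the members of any other cluster. It assumes Euclidean distances and at least two clusters, and sets the value for singleton clusters to 0 by convention; the function name is illustrative.

```python
import numpy as np

def silhouette_values(x, labels):
    """Silhouette value of every observation (rows of x)."""
    x = np.asarray(x, dtype=float)
    labels = np.asarray(labels)
    d = np.linalg.norm(x[:, None] - x[None], axis=-1)
    cluster_ids = np.unique(labels)
    s = np.zeros(len(x))
    for i in range(len(x)):
        own = labels == labels[i]
        n_own = own.sum()
        if n_own == 1:
            continue                          # singleton cluster: value stays 0
        # Mean distance to own cluster, excluding the observation itself.
        a = d[i, own].sum() / (n_own - 1)
        # Smallest mean distance to any other cluster.
        b = min(d[i, labels == c].mean() for c in cluster_ids if c != labels[i])
        denom = max(a, b)
        s[i] = 0.0 if denom == 0 else (b - a) / denom
    return s
```

Values near 1 indicate observations that sit well inside their cluster, values near 0 indicate observations between clusters, and negative values indicate observations that are on average closer to another cluster.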

Some clustering methods have been specifically developed to handle the typical large storage requirements and long run times for HDD settings. For example, CAST (Cluster Affinity Search Technique) [83] is especially useful for large numbers of observations or variables. Iteratively, clusters are constructed as follows. Choose a randomly selected observation not already assigned to a cluster and assign it to a newly defined cluster. Then repeat the following two steps until the set of observations assigned to this new cluster no longer changes. Add unassigned observations with average similarity to the current cluster members above a predefined threshold, and remove observations with average similarity below this threshold.
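The iterative scheme described above can be sketched as follows. This is an illustration of the description in this paragraph and not the published CAST implementation [83]: it assumes a precomputed symmetric similarity matrix, adds or removes one observation at a time (the most similar unassigned observation, or the least similar current member), and caps the number of add/remove rounds with a hypothetical `max_steps` argument to guarantee termination.

```python
import numpy as np

def cast_sketch(sim, threshold, max_steps=10_000, seed=0):
    """Threshold-based clustering on a similarity matrix; returns labels."""
    sim = np.asarray(sim, dtype=float)
    rng = np.random.default_rng(seed)
    labels = np.full(sim.shape[0], -1)           # -1 = not yet assigned
    next_label = 0
    while np.any(labels == -1):
        free = set(np.flatnonzero(labels == -1).tolist())
        start = int(rng.choice(sorted(free)))    # random unassigned observation
        members = [start]
        free.discard(start)
        for _ in range(max_steps):
            changed = False
            # Average similarity of every observation to the current members.
            affinity = sim[:, members].mean(axis=1)
            if free:                             # ADD step
                best = max(free, key=lambda i: affinity[i])
                if affinity[best] >= threshold:
                    members.append(best)
                    free.discard(best)
                    changed = True
            affinity = sim[:, members].mean(axis=1)
            if len(members) > 1:                 # REMOVE step
                worst = min(members, key=lambda i: affinity[i])
                if affinity[worst] < threshold:
                    members.remove(worst)
                    free.add(worst)
                    changed = True
            if not changed:
                break                            # cluster membership is stable
        labels[members] = next_label
        next_label += 1
    return labels
```

Unlike k-means, the number of clusters is not chosen in advance here; it follows from the similarity threshold.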

Another method is subspace clustering [84], where first subsets of variables are identified (called subspaces) and clusters are determined by defining regions of values based only on these variables. Then, iteratively, lower-dimensional subspaces are combined to higher-dimensional ones. In biclustering (or two-way clustering), first introduced by Hartigan [85], variables and observations are selected simultaneously to generate clusters that do not depend on all variables at the same time. Again, heuristic and stable algorithms are required to find approximate solutions in acceptable time (see, e.g., [86]).

Many traditional clustering methods are best suited for continuous variables, but there are several examples of HDD that are not continuous. One example is count data such as generated by RNA-Seq. Some examples of clustering methods that have been specifically developed for count data include those of Witten [87] and Si et al. [88], which are based on Poisson or negative binomial distributions. Cluster analysis based on deep learning has also been proposed [89]. That approach trains a deep neural network, extracts the resulting hidden variables, and uses them as the basis for clustering using standard methods like k-means.

EDA2.2: Prototypical samples

Often it is useful to construct prototypical observations that represent subgroups of observations. Prototypical observations are, for example, identified by some clustering algorithms. The motivation is to allow visualization or provide a summary of relevant characteristics of subgroups of observations. These summaries can be interpreted in the biomedical context, for example as a description of the characteristics of a typical patient who responds well to a particular therapy. Prototypical samples can be selected as central observations in their respective subgroups, or they can be newly constructed. When applying a k-means algorithm to separate observations into K clusters, centroids of each cluster are natural choices for prototypes. Similar to the principles of many cluster analysis approaches (see section “EDA2.1: Cluster analysis”), the construction of prototypical observations is done such that they are simultaneously as similar as possible to the observations of the same subgroup (cluster) and as different as possible from the observations of the other subgroups. Bien and Tibshirani [90] provide a nice overview of available methods, although their review is limited to classification problems. Prototypical observations can also be used to represent classes and then to predict the class of a new observation based on the similarities with these prototypical samples (see also section “PRED: Prediction”).

TEST: Identification of informative variables and multiple testing

In HDD analysis, one is often interested in identifying, among a large number of candidate variables, “informative variables.” These are associated with an outcome or with a set of other phenotype variables that characterize the study subjects. For example, one might wish to characterize which single-nucleotide polymorphisms are more often present in patients who experience severe side effects from a particular drug compared to patients without severe side effects. In drug sensitivity screens performed on bacterial cultures, one might aim to identify bacterial genes with expression significantly associated with degree of sensitivity to a new antibiotic. When comparing individuals with a particular disease to healthy volunteers, one might wish to identify circulating proteins that are present in different abundance. In all these cases, evaluation of the associations might be accomplished by conducting many statistical hypothesis tests, one per candidate variable. This represents a multiple testing situation.

Multiple testing scenarios commonly encountered in biomedical studies with HDD are divided here into three categories. Scenarios that consider each candidate variable individually and perform a similar evaluation or statistical test for each include the following three cases: (i) Identification of variables among a set of candidates that are associated with a single outcome or phenotype variable, i.e., related to outcome or phenotype classes (categorical) or correlated with a continuous phenotype variable or time-to-event outcome. (ii) Identification of candidate variables with a trajectory over time affected by experimental factors or exhibiting a prescribed pattern. (iii) Identification of candidate variables that are associated with a prespecified set of other variables, i.e., where the candidate variables are considered as dependent variables and the set of prespecified variables as independent “predictor” variables. To illustrate the concepts, much of the discussion here will focus on a simple example of scenario (i) in which two classes are being compared with respect to a very large number of variables. Methods discussed for scenario (i) that can be extended straightforwardly to scenarios (ii) and (iii) are noted.

Scientific goals may go beyond simply providing a list of individual variables exhibiting associations with an outcome, a phenotype, a collection of prespecified variables, or patterns over time. Frequently, there is interest in more globally characterizing the variables that were included in the identified list. For example, genes are organized into interconnected biological pathways. Expression of two different genes might exhibit similar associations because they are both regulated by certain other genes, because one lies downstream of the other in the same biological pathway, or because their products serve similar biological functions. Established organizational structures might be described by gene taxonomies such as Gene Ontology [91], KEGG [92], or BioCarta [93]. Gene set enrichment analysis (see section “TEST3: Identify informative groups of variables”) refers to approaches that exploit these expected associations. They were first proposed in the omics field for use with HDD gene expression data. Although these enrichment analysis strategies could be applied in a variety of HDD settings, subsequent discussion of these methods will be based on examples with high-dimensional gene expression data for which the concept of enrichment is intuitively clear.

TEST1: Identify variables informative for an outcome

TEST1.1: Test statistics: Hypothesis testing for a single variable

Before discussing multiple testing procedures, it is helpful to briefly review basic concepts in statistical hypothesis testing involving a single variable. A hypothesis test aims to decide whether the data support or refute a stated “null hypothesis.” Typical examples of simple null hypotheses are that the distribution of a variable is not different between two or more groups or that a variable is not associated with another variable. A hypothesis test is based on some statistic that will reflect strength of evidence for or against the null hypothesis. Knowing the distribution of the test statistic (e.g., normal distribution or binomial distribution) allows one to construct a hypothesis test based on that statistic for which the probability of drawing an incorrect conclusion is controlled. Type I error refers to erroneously rejecting the null hypothesis when it is actually true. Type II error refers to failing to reject the null hypothesis when it is actually false. Statistical power is defined as one minus the probability of a type II error. In general, one wants to control the probability of a type I error, denoted α, at a small value, while maintaining acceptably high power (or, equivalently, a low probability of a type II error). A conventional choice of α for the single variable setting is 0.05, which means that the probability of a false positive decision, i.e., falsely rejecting the null hypothesis when it is true, is 0.05.
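The relationship between α, type I error, and power can be illustrated by simulation. The following is a minimal sketch, not a recommended analysis: it assumes two groups of normally distributed observations with known unit variance, so that a simple z-statistic can be used, and the function name `rejection_rate` and all parameter values are hypothetical choices made for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def rejection_rate(true_diff, n=30, alpha=0.05, n_sim=2000, seed=1):
    """Fraction of simulated two-group studies in which the null hypothesis
    of no mean difference is rejected (two-sided z-test, known unit variance)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    se = sqrt(2.0 / n)  # standard error of the mean difference (variance 1 per group)
    rejections = 0
    for _ in range(n_sim):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        y = [rng.gauss(true_diff, 1.0) for _ in range(n)]
        z = (fmean(y) - fmean(x)) / se
        if abs(z) > z_crit:
            rejections += 1
    return rejections / n_sim


# Null hypothesis true: the rejection rate estimates the type I error probability.
type_i_rate = rejection_rate(true_diff=0.0)
# Null hypothesis false: the rejection rate estimates the power.
power = rejection_rate(true_diff=1.0)
print(f"estimated type I error rate: {type_i_rate:.3f}")
print(f"estimated power: {power:.3f}")
```

Under these assumptions the first estimate should be close to the chosen α, and the second should be high because the simulated difference is large relative to the standard error.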

Hypothesis testing is often operationalized by calculation of a p-value from the observed data, which estimates the probability of observing a value of the test statistic that is at least as extreme as that observed, assuming that the null hypothesis is true. (Note the correct definition of a p-value stated here, in contrast to the common misinterpretation of a p-value as the probability that H0 is true.) A significance test is performed by comparing the computed p-value to the prespecified α level. When the p-value is less than or equal to α (e.g., 0.05 in the conventional setting), the null hypothesis is rejected; otherwise, it cannot be rejected.
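As a minimal sketch of this decision rule, the code below computes a two-sided p-value for a difference in means between two groups and compares it to α. It assumes a large-sample normal approximation to the test statistic, which is crude for the small illustrative samples used here; in practice a t-distribution would typically be used. The function name `two_sample_z_pvalue` and the data values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def two_sample_z_pvalue(x, y):
    """Two-sided p-value for a difference in means, using a normal
    approximation to the test statistic (an assumption of this sketch)."""
    se = sqrt(variance(x) / len(x) + variance(y) / len(y))
    z = (mean(x) - mean(y)) / se
    # Probability, under the null hypothesis, of a statistic at least as
    # extreme (in absolute value) as the one observed.
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p


alpha = 0.05  # prespecified significance level
group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.4, 5.7, 5.2]  # made-up measurements
group_b = [4.2, 4.8, 4.5, 5.0, 4.1, 4.7, 4.4, 4.9]  # made-up measurements

z, p = two_sample_z_pvalue(group_a, group_b)
reject_null = p <= alpha
print(f"z = {z:.2f}, p = {p:.2g}, reject H0: {reject_null}")
```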

It should be mentioned that sometimes the goal of a scientific study is to estimate certain parameters of interest, for example means or correlations, rather than to test hypotheses. In estimation settings, it is generally desired to provide intervals of uncertainty, such as confidence intervals, to accompany parameter estimates. Although errors in hypothesis testing have some relation to confidence interval coverage probabilities, most of the multiple testing procedures discussed in this section are not readily applicable to multiple estimation. Multiple estimation procedures are beyond the scope of the present discussion.

The t-test is an example of a widely used statistical test for a single variable. It is the basis for the modelling approaches described below that are extensions of hypothesis testing to multiple variables. Extensions particularly developed for HDD include limma, edgeR, and DESeq2, as discussed in section “TEST1.2: Modelling approaches: Hypothesis testing for multiple variables.”

Calculation of a p-value usually requires assumptions about the distribution of the test statistic. Sometimes that distribution can be derived from assumptions about the distributions of the variables. For example, the statistic of the t-test can be shown to have a t-distribution when the variables are normally distributed and the within-group variances are the same for the classes being compared. Similar requirements hold for F-tests in analysis of variance and statistics associated with standard linear regression analysis. Although one can never be certain if these assumptions hold for real data, many test statistics can be shown by theoretical arguments to have an approximate normal distribution when sample size is sufficiently large (referred to as “asymptotic” approximation). An example asymptotic property is that a t-statistic has an approximate normal distribution for large sample sizes, even if the data are not normally distributed. Nonetheless, extra caution is necessary in the setting of HDD where the requirements for sample size to qualify as “large” are far greater. Extremes of a test statistic’s distribution are particularly prone to departures from data distributional assumptions, and this is exactly where accuracy is needed most when calculating the very small p-values upon which many multiple testing procedures for HDD rely.

When validity of assumptions required for familiar statistical tests is uncertain, for example that the data follow a normal distribution for the t-test or F-test, alternative tests broadly referred to as nonparametric tests may be preferable. Wilcoxon rank sum (equivalent to Mann–Whitney U) and signed rank tests are nonparametric alternatives to the two-sample t-test and paired t-test, respectively; the Kruskal–Wallis test is an alternative to the F-test in one-way ANOVA. These nonparametric tests are robust to outliers and do not require data to be normally distributed; nor do they require that their distribution is fully characterized by two parameters in the way that a mean and variance characterize a normal distribution. Many nonparametric tests are based on ranks of observed data rather than their actual values. Permutation tests, as described in Table 15 and below, comprise another class of nonparametric tests and are more generally applicable than rank-based tests.

A word of caution is in order to emphasize that correct permutation of the data is critical to validity of a permutation test. The permutations must preserve any structure in the data that is unrelated to the null hypothesis. For instance, if the goal is to test whether the mean of a variable is different between groups, but it is thought that the variances are different, then the simple permutation test described for the two-group comparison will not be appropriate because the permutations will change the variances as well as the means. If the groups are paired, e.g., variables are measured both before and after each subject receives an experimental drug, then the permutations would have to preserve that pairing by randomly “flipping” the before and after measurements within patients. Correct permutation might not be easy, or even feasible, for regression models with multiple predictors. For example, naively permuting the outcomes in a logistic or Cox regression model with many predictors to provide test statistics for individual predictor variables (adjusted for the other variables) would not provide valid permutation p-values because the correlation structure of the data, e.g., correlations of the outcome with other variables that are not the focus of the test, would not be preserved. Anderson and Legendre [94] discuss appropriateness and performance of various permutation testing strategies in the context of testing partial regression coefficients in multivariable regression models.
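The two permutation schemes mentioned above, shuffling group labels for an unpaired two-group comparison and flipping before/after measurements within subjects for a paired design, can be sketched as follows. This is a minimal illustration under stated assumptions: the test statistic is the absolute mean difference, permutations are sampled at random rather than enumerated, and the function names, data values, and the small numerical tolerance are hypothetical choices. The unpaired version assumes the groups are exchangeable under the null hypothesis, so it is not appropriate when, for example, the variances are thought to differ.

```python
import random
from statistics import fmean


def permutation_pvalue_two_group(x, y, n_perm=10000, seed=0):
    """Two-group permutation test: shuffle group membership."""
    rng = random.Random(seed)
    observed = abs(fmean(x) - fmean(y))
    pooled = list(x) + list(y)
    n_x = len(x)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # random reassignment of observations to groups
        stat = abs(fmean(pooled[:n_x]) - fmean(pooled[n_x:]))
        if stat >= observed - 1e-12:
            count += 1
    # Add one to numerator and denominator so the observed data count as
    # one of the permutations and the p-value is never exactly zero.
    return (count + 1) / (n_perm + 1)


def permutation_pvalue_paired(before, after, n_perm=10000, seed=0):
    """Paired permutation test: flip before/after within each subject,
    which is equivalent to flipping the sign of each within-subject difference."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(after, before)]
    observed = abs(fmean(diffs))
    count = 0
    for _ in range(n_perm):
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(fmean(flipped)) >= observed - 1e-12:
            count += 1
    return (count + 1) / (n_perm + 1)


group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.4, 5.7, 5.2]  # made-up values
group_b = [4.2, 4.8, 4.5, 5.0, 4.1, 4.7, 4.4, 4.9]  # made-up values
p_unpaired = permutation_pvalue_two_group(group_a, group_b)

before = [120, 132, 118, 125, 140, 128, 135, 122]  # made-up values
after = [115, 125, 117, 119, 133, 124, 126, 120]   # made-up values
p_paired = permutation_pvalue_paired(before, after)
print(f"unpaired p = {p_unpaired:.4f}, paired p = {p_paired:.4f}")
```

Note that the paired function never mixes measurements across subjects, which is how the pairing structure is preserved.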

Nonparametric methods have advantages and disadvantages. In the context of statistical tests, their main advantages include their applicability in situations where little is understood about the likely distribution of the data, and their robustness to oddities in the data such as outliers. The main disadvantage of nonparametric methods is their reduced statistical power, particularly for small sample sizes, compared to a parametric test when distributional assumptions of that test are actually met. For HDD settings, parametric tests have additional appeal, when reasonably justified, due to the possibility to “borrow information” across variables by modelling relationships of parameters (e.g., means or variances) across variable-specific distributions; modelling approaches such as those discussed in section “TEST1.2: Modelling approaches: Hypothesis testing for multiple variables” can greatly increase statistical power for testing multiple hypotheses.

TEST1.2: Modelling approaches: Hypothesis testing for multiple variables

In the scenarios (i)-(iii) described in the introduction of section “TEST: Identification of informative variables and multiple testing”, the number of statistical analyses performed is equal to the number of variables. For omics data, the number of variables is often in the range of tens of thousands or even millions. Direct application of standard hypothesis testing approaches to each variable in the setting of HDD is problematic. As an illustration, consider conducting tens of thousands of statistical tests (one per candidate variable), each using the classical α level of 0.05 to test for significance of an association between a single variable and an outcome or phenotype of interest. If the truth were that none of the candidate variables had an association with the outcome or phenotype of interest, then, on average, testing 20,000 variables would lead to 1000 false positive test results (0.05 times the 20,000 variables tested), clearly an unacceptably large number that would limit interpretability of the results. Control of the number of false positives, often termed “false discoveries” in the setting of HDD, is critical.
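The arithmetic in this illustration can be checked with a short sketch. It computes the expected number of false positives when all null hypotheses are true, the probability of at least one false positive, and a simulated count of false positives. Two assumptions are made that are not part of the text above: the tests are treated as independent, and p-values under the null hypothesis are drawn as uniform random numbers on [0, 1]. All function names are hypothetical.

```python
import random


def expected_false_positives(m, alpha):
    """Expected number of rejections when all m null hypotheses are true."""
    return m * alpha


def prob_at_least_one_false_positive(m, alpha):
    """Probability of one or more false positives among m tests,
    assuming the tests are independent (an assumption of this sketch)."""
    return 1.0 - (1.0 - alpha) ** m


def simulate_false_positives(m, alpha, seed=0):
    """Count rejections when every p-value is drawn from a uniform
    distribution, as is the case for a continuous test statistic under the null."""
    rng = random.Random(seed)
    return sum(1 for _ in range(m) if rng.random() <= alpha)


m, alpha = 20_000, 0.05
print("expected false positives:", expected_false_positives(m, alpha))
print("simulated false positives:", simulate_false_positives(m, alpha))
print("P(at least one false positive), 10 tests:",
      round(prob_at_least_one_false_positive(10, alpha), 3))
```

Even with only 10 independent tests at α = 0.05, the probability of at least one false positive is already about 0.40, which shows how quickly errors accumulate as the number of tests grows.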

Several challenges are encountered in multiple testing for HDD omics data. One is that in order to control false positives when a very large number of statistical tests are performed, small α levels must be used, which limits statistical power. Another challenge is the mathematical difficulty of dealing with joint distributions of certain variable types such as counts, which are commonly generated by newer omics technologies such as RNA-Seq. Furthermore, sample sizes are often insufficient to rely on classical statistical asymptotic (large sample size) theory to provide tractable approximate distributions of test statistics required to appropriately control type I and II errors. Finally, the classical approach of limiting false positives by controlling the overall probability of any false positive findings is overly stringent when extremely large numbers of tests are performed. These challenges have spawned a wealth of innovative statistical approaches for multiple testing with HDD, which are described in the sections that follow.

The earliest technologies for high-dimensional gene expression analysis based on microarray platforms quantified gene expression by fluorescence intensities. After logarithmic transformation, these continuous intensity values are typically well approximated by a normal distribution. Many of the early methods developed for statistical analysis of microarray data relied on normally distributed data, the simplest example being use of t-tests to identify lists of differentially expressed genes with varying degrees of type I error control. Sample size in these early studies was usually relatively small, making it difficult to adequately control false discoveries and still maintain sufficient statistical power. Some of these methods were ad hoc or limited to simple experimental settings such as two-group comparisons, but advances in statistical methodology led to improved approaches for the analysis of HDD gene expression data (Table 16).

Sometimes a researcher is interested in identifying genes for which expression is not different between conditions, the opposite of the more typical goal of identifying differentially expressed genes. This requires reversing the usual role of the null and alternative hypotheses. However, since it is impossible to statistically rule out very tiny effects, the null hypothesis that is tested for each gene is that its effect is larger than some user-specified minimum size. When implementing this procedure to identify genes with negligible effect, mean parameter shrinkage functions must be turned off.

TEST2: Multiple testing

Methods described in the previous section provide useful approaches to improve statistical power for testing individual variables (genes) and to appropriately model commonly encountered omics data. However, a final step is required to control false positives in HDD settings. Several multiple testing correction methods and their utility for HDD are discussed in this section.

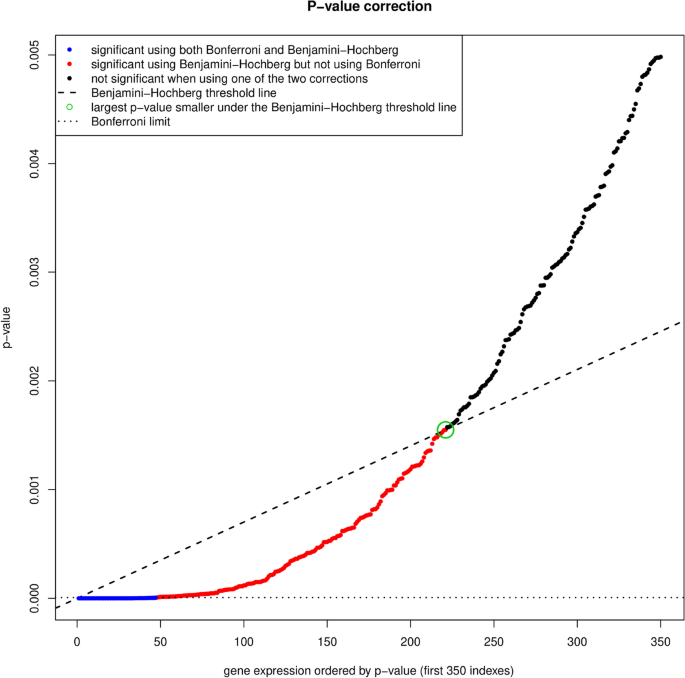

TEST2.1: Control for false discoveries: Classical multiple testing corrections

A simple table illustrates the types of errors that can be encountered in multiple testing [100]. When testing m hypotheses, these are either true or false, and either rejected or not rejected, yielding four possibilities, which are displayed in Table 17 along with the numbers of hypotheses falling in each category.
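The four possibilities can be counted directly when the truth of each null hypothesis is known, which in practice is only the case in simulation studies. The following minimal sketch cross-tabulates truth against test decision; the function name, the dictionary keys, and the example vectors are hypothetical and are not the notation used in Table 17.

```python
from collections import Counter


def cross_tabulate(null_is_true, rejected):
    """Count hypotheses in each of the four truth-by-decision categories."""
    counts = Counter(zip(null_is_true, rejected))
    return {
        "true_null_rejected": counts[(True, True)],           # false positives (type I errors)
        "true_null_not_rejected": counts[(True, False)],      # correct non-rejections
        "false_null_rejected": counts[(False, True)],         # correct rejections
        "false_null_not_rejected": counts[(False, False)],    # false negatives (type II errors)
    }


# Made-up example with m = 6 hypotheses.
null_is_true = [True, True, True, False, False, True]
rejected = [False, True, False, True, False, False]

table = cross_tabulate(null_is_true, rejected)
for category, count in table.items():
    print(f"{category}: {count}")
```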